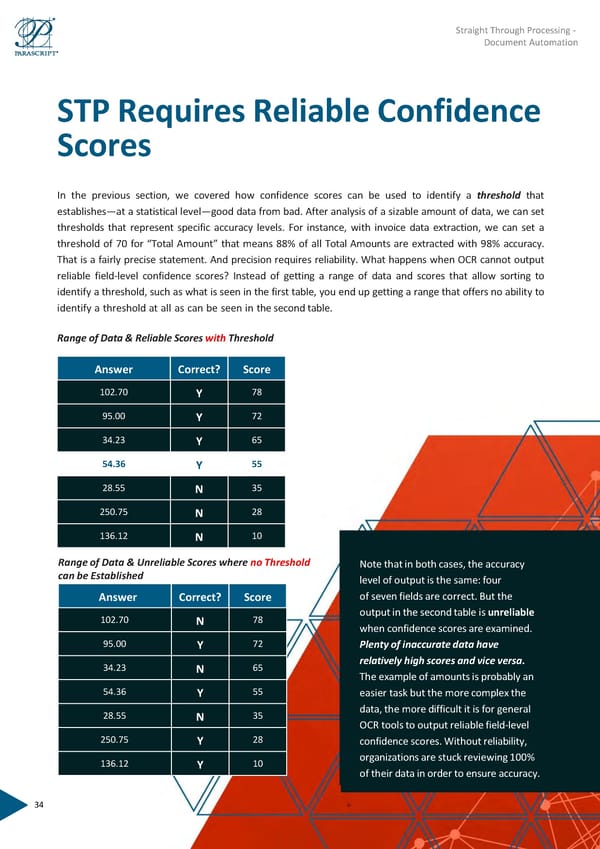

Straight Through Processing - Document Automation

STP Requires Reliable Confidence Scores

In the previous section, we covered how confidence scores can be used to identify a threshold that separates, at a statistical level, good data from bad. After analysis of a sizable amount of data, we can set thresholds that represent specific accuracy levels. For instance, with invoice data extraction, we can set a threshold of 70 for “Total Amount” that means 88% of all Total Amounts are extracted with 98% accuracy. That is a fairly precise statement. And precision requires reliability.

What happens when OCR cannot output reliable field-level confidence scores? Instead of getting a range of data and scores that allow sorting to identify a threshold, such as what is seen in the first table, you end up getting a range that offers no ability to identify a threshold at all, as can be seen in the second table.

Range of Data & Reliable Scores with Threshold

| Answer | Correct? | Score |
|--------|----------|-------|
| 102.70 | Y | 78 |
| 95.00 | Y | 72 |
| 34.23 | Y | 65 |
| 54.36 | Y | 55 |
| 28.55 | N | 35 |
| 250.75 | N | 28 |
| 136.12 | N | 10 |

Range of Data & Unreliable Scores where no Threshold can be Established

| Answer | Correct? | Score |
|--------|----------|-------|
| 102.70 | N | 78 |
| 95.00 | Y | 72 |
| 34.23 | N | 65 |
| 54.36 | Y | 55 |
| 28.55 | N | 35 |
| 250.75 | Y | 28 |
| 136.12 | Y | 10 |

Note that in both cases, the accuracy level of output is the same: four of seven fields are correct. But the output in the second table is unreliable when confidence scores are examined. Plenty of inaccurate data have relatively high scores and vice versa. The example of amounts is probably an easier task, but the more complex the data, the more difficult it is for general OCR tools to output reliable field-level confidence scores. Without reliability, organizations are stuck reviewing 100% of their data in order to ensure accuracy.
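To make the contrast between the two tables concrete, here is a minimal sketch in Python that searches each table for a usable threshold. It is an illustration only, not a description of any particular OCR product: the function name `best_threshold`, the `target_accuracy` parameter, and the variable names `reliable` and `unreliable` are hypothetical, and the rows are copied from the two tables. The approach assumed here is simply to try every observed score as a cutoff and keep the lowest one at which all fields scoring at or above the cutoff meet the target accuracy.

```python
from typing import List, Optional, Tuple

# Each row is (answer, correct?, confidence score), copied from the two tables.
Row = Tuple[str, bool, int]

reliable: List[Row] = [
    ("102.70", True, 78), ("95.00", True, 72), ("34.23", True, 65),
    ("54.36", True, 55), ("28.55", False, 35), ("250.75", False, 28),
    ("136.12", False, 10),
]
unreliable: List[Row] = [
    ("102.70", False, 78), ("95.00", True, 72), ("34.23", False, 65),
    ("54.36", True, 55), ("28.55", False, 35), ("250.75", True, 28),
    ("136.12", True, 10),
]


def best_threshold(
    rows: List[Row], target_accuracy: float = 1.0
) -> Optional[Tuple[int, float]]:
    """Hypothetical helper: return (cutoff, coverage) for the lowest cutoff
    whose passing fields (score >= cutoff) meet target_accuracy, or None
    if no cutoff qualifies."""
    best = None
    # Try each observed score as a cutoff, from highest to lowest.
    for cutoff in sorted({score for _, _, score in rows}, reverse=True):
        passed = [correct for _, correct, score in rows if score >= cutoff]
        accuracy = sum(passed) / len(passed)
        if accuracy >= target_accuracy:
            # A lower qualifying cutoff lets more fields skip manual review.
            best = (cutoff, len(passed) / len(rows))
    return best


# Overall accuracy is identical in both tables: four of seven correct.
assert sum(c for _, c, _ in reliable) == sum(c for _, c, _ in unreliable) == 4

print(best_threshold(reliable))    # cutoff 55, with 4 of 7 fields passing
print(best_threshold(unreliable))  # None: no cutoff isolates the correct fields
```

With the reliable scores, a cutoff of 55 lets four of the seven fields pass without review. With the unreliable scores, no cutoff yields a fully accurate group, so every field would still need to be checked.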

Straight Through Processing for Document Automation Page 33 Page 35
