Intel Corporate Responsibility Report

Corporate Responsibility Report 2021-22

2021-22 Corporate Responsibility Report, intel.com/responsibility

Introduction

Table of Contents

Introduction
    Table of Contents
    Letter From Our CEO
    Letter From Our CPO
    A Year in Review
    IRTI: Going Beyond COVID-19
    Corporate Responsibility and Our 2030 RISE Strategy
    Awards and Recognitions

Our Business
    Company Profile
    Our People and Culture
    Governance, Ethics, and Public Policy
    Stakeholder Engagement
    Respecting Human Rights
    Supply Chain Responsibility

Responsible
    Responsible: Our Approach
    Employee Health, Safety, and Wellness
    Protecting Human Rights in the Supply Chain
    Responsible Minerals Sourcing
    Responsible Mobility
    Revolutionizing Health and Safety Through Technology

Inclusive
    Inclusive: Our Approach
    Inclusive Workforce
    Social Equity
    Building a Diverse Technology Industry
    Supplier Diversity and Inclusion
    Making Technology Fully Inclusive and Expanding Digital Readiness

Sustainable
    Sustainable: Our Approach
    Environmental Management
    Climate and Energy
    Product Energy Efficiency
    Water Stewardship
    Waste and Circular Economy Solutions
    Sustainable Manufacturing and Chemistry Initiatives
    Achieving Carbon Neutral Computing

Enabling
    Enabling: Our Approach
    Employees Changing the World
    Intel Foundation: Rising to the Future
    Collaborating for Technology Impact

Appendix
    About this Report
    Independent Limited Assurance Statement
    Performance Data Summary
    SASB and TCFD Framework Alignment
    UN Sustainable Development Goals
    Non-GAAP Financial Measures
    Intel 2021 Water Inventory by Location and Source
    2021 Environmental, Health, and Safety Violations
    Top 100 Production and Service Suppliers
    Acronym Index

Our Commitment to Transparency

This report provides a comprehensive summary of our approach to corporate responsibility and our performance for calendar and fiscal 2021, unless otherwise stated. This report has been prepared in accordance with the Global Reporting Initiative (GRI) Standards: Comprehensive option. Our GRI Content Index is provided on our Report Builder website. We also use other recognized frameworks to inform the content of this report, including the United Nations (UN) Global Compact, UN Sustainable Development Goals, the Task Force on Climate-Related Financial Disclosures (TCFD), and the Value Reporting Foundation.¹

In 2021, we continued to advance our integrated reporting strategy to include environmental, social, and governance information in our 2021 Annual Report on Form 10-K and 2022 Proxy Statement, available on our Investor Relations website. For additional information on Intel’s approach to corporate responsibility, supporting documents and data, past reports, and to customize a report with the sections of your choice, visit our Corporate Responsibility and Report Builder websites.

¹ SASB and IIRC announced the finalization of their merger in June 2021, creating a unified organization, the Value Reporting Foundation.

Letter From Our CEO

At Intel, our purpose is to build world-changing technology that improves the life of every person on the planet. We strive every day to make a positive global impact and be good corporate citizens.

Increasingly central to every aspect of human existence, technology is transforming our world at an accelerated pace. And at the heart of that technology: semiconductors. Semiconductors are the foundation of all innovation as the world becomes more digital, from powering our ability to work remotely, to staying connected with friends and family, to providing enhanced healthcare and autonomous vehicles.

Today’s challenges are many and immense. We are still struggling with a global pandemic, social injustice, wars, the detrimental effects of climate change, and so much more. Against this global landscape, it is imperative that we work together as an industry to harness the power of technology and ensure it is being used as a force for good.

I am extremely proud of Intel’s long-standing leadership in corporate responsibility and sustainability, including initiatives in diversity and inclusion, education, and philanthropy. Our focus helps us contribute responsible, inclusive, and sustainable practices across our operations, products, and supply chain. The passion and expertise of our employees, energized by our purpose, are accelerating our collective contributions to the world.

This Corporate Responsibility Report outlines our progress on the 2030 goals we laid out two years ago. I am proud of our accomplishments, including:

• Continuing to invest in conservation projects and set new targets. Building on our long history of leadership in this space, we are driving reductions in greenhouse gas emissions, energy use, water use, and waste generation. For example, we achieved net positive water in three countries, sustained 80% renewable electricity globally, and sent about 5% of our total waste to landfill. This work has contributed to us being recognized as No. 1 on Barron’s 100 Most Sustainable Companies list. We are also continuing our work on sustainable chemistry, including ongoing efforts to refine the chemical footprint methodology for use in evaluation of the chemical supply chain.

• Accelerating efforts on the Alliance for Global Inclusion. Last year, we launched a coalition of technology and adjacent industry partners to improve diversity and inclusion practices and promote transparent reporting in four critical areas: leadership representation, inclusive language, inclusive product development, and STEM readiness in underserved communities. We more than doubled membership and made meaningful progress on creating an inclusion index to help companies track diversity and inclusion improvements against a shared benchmark.

• Evolving our Pandemic Response Technology Initiative (PRTI). This has now become our Intel RISE Technology Initiative (IRTI), which funds projects related to healthcare, education, and the economy, with dedicated work streams for social equity and human rights, accessibility, and climate action. The IRTI is a purpose-driven platform for action backed by a $20 million commitment.

• Announcing the AI Incubator Network. This is an initiative in partnership with Dell Technologies and the American Association of Community Colleges. As part of Intel’s AI for Workforce program, the AI Incubator Network will design and build AI labs across the US by leveraging the expertise and industry connections of America’s community college system. Since launching the AI for Workforce program in 2020, Intel has expanded it to 31 schools across 18 states with a goal of reaching all 50 states by 2023.

As we look ahead, we will build on this momentum to drive progress and take us to even greater heights in 2022 and in the years ahead, including:

• Committing to net-zero greenhouse gas emissions across our operations by 2040. We will do this even as we significantly expand our global operations. We are committed to increasing the energy efficiency and lowering the carbon footprint of Intel products and platforms, and to working with customers and industry partners to create solutions that lower the greenhouse gas footprint of the entire technology ecosystem. Furthermore, through sustainable manufacturing practices and aggressive environmental targets, we are deeply committed to being good neighbors in the communities where we operate.

• Making a $100 million investment to establish education and research collaborations. Working with the US National Science Foundation, we will continue to boost research and workforce development in semiconductor manufacturing through a $100 million investment over the next decade to establish education and research collaborations with universities, community colleges, and technical educators in Ohio and across the nation.

• Maximizing existing talent while building a diverse talent pipeline across our industry. Despite talent leaving the workforce in the wake of COVID-19 and drops in higher education enrollment, our 2030 goals remain unchanged. We will work to maximize existing talent while building a diverse talent pipeline across our industry. In addition, we have set a 2022 target that our technical hiring in entry-level roles is at least 30% women.

• Driving positive impact in supply chain responsibility. Through our efforts to help protect human rights, our suppliers have returned $25 million in fees to their workers since 2014. In 2021, our progress also included expansion of our work in responsible minerals sourcing, and the achievement of $1.4 billion in annual spending with diverse-owned suppliers.

We have made incredible strides over the past year, but there is still much to do. We are just getting started.

Pat Gelsinger, Chief Executive Officer, Intel Corporation

Letter From Our CPO

One of the things that inspired me to join Intel is the company’s long-standing commitment to positive global impact. We have a goal to create world-changing technology that improves the life of every person on the planet, but beyond that, we strive to do what’s right for people and the planet every day.

At the center of these commitments are Intel’s more than 121,000 employees around the world. Our commitment and action related to responsible corporate practices are more than just important to the global workforce; they are table stakes. As Chief People Officer, my top job is to attract and retain the world’s best talent across every function, from the front lines in our factories to the engineers at the forefront of innovation. Intel’s leadership in corporate responsibility is what helps us continue to be an employer of choice.

We invest in programs that create opportunities for people around the world as part of our 2030 RISE corporate responsibility goals. Not only does Intel continue to accelerate the integration of responsible, inclusive, and sustainable practices in our operations, supply chain, the industry, and beyond, we also enable our customers to meet their environmental and corporate responsibility commitments through our technology and the expertise of our employees.

Our long history of leadership in corporate responsibility is a competitive advantage for our business. And our integrated approach helps us mitigate risks, build brand value, and identify new market opportunities to apply our technology to help address society’s most complex issues. Paramount to Intel’s success is our 2030 RISE strategy, which outlines our approach to corporate responsibility this decade, as well as the measurable goals we’ve set for ourselves to achieve in the next eight years. An important aspect of this strategy, outlined in the details of this report, is that our efforts are broader than just our own operations. This is not added work, it’s how we work.

In 2021 we launched several industry-wide inclusion actions to enable our goals, including:

• The Alliance for Global Inclusion was launched to drive collective impact in four key areas: leadership representation, inclusive language, inclusive product development, and improving STEM readiness in underserved communities. The alliance recently celebrated its first anniversary, and its membership has doubled since launch.

• In its first year since launch, the Million Girls Moonshot reached 160,000 girls across the US via 21,000 after-school and summer STEM programs, and 612 new STEM partnerships.

Our 2030 RISE goals also focus on fully harnessing the power of technology to solve increasingly complex and interconnected global challenges. For example, we are partnering with the technology industry and other stakeholders to revolutionize computing and expand digital readiness:

• During 2021, Intel drove a wide range of programs, including AI for Citizens, AI for Youth, AI for Future Workforce, AI for Current Workforce, and Digital Readiness for Leaders, to empower citizens, students, professionals, and leaders to actively participate in and benefit from a digital economy. We scaled the programs by working with more than 20 countries and over 50 ministries and partners, impacting over 13,000 institutions and 1 million people globally.

And inside of Intel, we believe that when every employee has a voice and a sense of belonging, Intel can be more innovative, agile, and competitive. An inclusive culture that welcomes all perspectives is critical for attracting, retaining, and progressing top talent who have a direct impact on innovation and on our products. Intel is committed to providing a work environment where employees from all backgrounds are valued, supported, respected, challenged, acknowledged, and rewarded so they can achieve their full potential. Recent examples include:

• Through our 2030 goals, we are committed to further advancing the representation of women and underrepresented minorities in leadership and technical positions at Intel, advancing accessibility, and embedding inclusive leadership practices in our culture and across our business.

• Our global representation of technical women declined from 25.2% in 2020 to 24.3% in 2021, but more technical women (some 26,000) work at Intel than at any time in our recent history. To meet our goal of increasing representation of women in technical roles to 40%, we will implement targeted programs to increase the number of women hired for technician, engineering hardware, and software roles through sourcing, pipelining, and workforce development initiatives. To drive additional corporate-level focus, we have made this an Annual Performance Bonus goal for all employees in 2022. In addition, we set a goal that our hiring for technical entry-level roles is at least 30% women.

• In 2022, we launched the Intel Hardship and Emergency Relief program, providing short-term relief of up to $2,500 (geo-adjusted) to employees facing hardship due to global disasters and crises.

We see the growing acknowledgment of the importance of corporate responsibility and sustainability, and our historic and ongoing leadership, as an opportunity to fulfill our purpose to create world-changing technology that improves the life of every person on the planet. We couldn’t be prouder of what we’ve achieved over our long history of action in corporate responsibility and of what’s to come.

Christy Pambianchi, Executive Vice President and Chief People Officer, Intel Corporation

A Year in Review

In May 2020, we laid out our 2030 RISE strategy and goals. Since then, we have made considerable progress on those goals. Below are some highlights from 2021 and early 2022:

Investing in New Factories
We announced significant manufacturing expansion plans, including the construction of two additional chip factories at our Arizona site, two more at a new Intel site in Ohio, and expansions in New Mexico, Oregon, and Malaysia. We estimate that our investments at each of the Ohio and Arizona sites will be $20 billion. We also announced plans to invest up to 80 billion euros to advance a world-class semiconductor ecosystem in the European Union. The European investment will include a new fab mega-site in Germany; an R&D hub in France; and R&D, manufacturing, and foundry services in Ireland, Italy, Poland, and Spain.

Advancing Responsible Mobility and Automotive Safety
Intel’s Mobileye technology has moved advanced driver-assistance systems from luxury add-on to standard equipment, and now helps keep drivers and passengers of more than 100 million vehicles safer. In December 2021, Intel announced its intention to take Mobileye public in the US in mid-2022 via an initial public offering of newly issued Mobileye stock.

Progressing Toward a Net Positive Water Goal
In 2021, we conserved 9.3 billion gallons of water internally and enabled restoration of approximately 2.3 billion gallons through investments in watershed restoration projects. These achievements advanced us toward our goal of net positive use, resulting in 99% (by volume) of global fresh water treated and returned to communities or the environment and restored through watershed projects during 2021. Through the end of 2021, we have achieved net positive water in three countries: the US, India, and Costa Rica.

Building a More Inclusive Workforce
In 2021, we continued our focus on career development and progression of diverse talent, and saw absolute numbers increase in all categories. Some of the numbers from 2020 to 2021 did not increase much as percentages or, in some cases, decreased, because of Intel’s unprecedented growth. We surpassed our milestone goal of reaching 1,375 women in senior leadership roles by 74, ending the year with 1,449 women in senior leadership roles across the globe.

Responding to Humanitarian Crises
When humanitarian crises or natural disasters strike, the Intel Foundation matches employees’ donations to support affected communities. In 2021, 16 relief campaigns raised nearly $2 million in donations and matches to aid recovery across 13 countries affected by wildfires, floods, winter storms, tornadoes, earthquakes, hurricanes, COVID-19, and more. More recently, the Foundation launched an employee donation campaign in response to the humanitarian crisis in Ukraine. Together, the Foundation and Intel employees raised over $2 million to support Ukraine aid efforts.

Leading Supply Chain Responsibility and Impact
As a result of our efforts to ensure the protection of human rights in the workplace, our suppliers have returned $25 million in fees to their workers since 2014. In 2021, our progress also included expansion of our work in responsible minerals sourcing, and the achievement of $1.4 billion in annual spending with diverse-owned suppliers, a 10-fold increase compared to 2015.

Contributing 848,000 Employee Volunteer Hours
While the pandemic limited in-person gatherings, we continued to offer virtual volunteer opportunities for employees to give their time and talent to those in need. In 2021, our employees reported more than 848,000 volunteer hours globally in support of schools and nonprofit organizations in our communities.

Becoming a “Hybrid-First” Company
Intel announced plans to become a “hybrid-first” company, allowing the majority of employees to split their time between working remotely and in the office. We also increased our employee compensation budget by about $1 billion in pay and $1.4 billion in stock.

IRTI: Going Beyond COVID-19

We are extremely proud of how our team has responded throughout the COVID-19 pandemic. We have made changes and adopted measures to continue providing a safe environment for our employees and the operation of our manufacturing sites, and worked to mitigate risks to workers in our supply chain. Intel, the Intel Foundation, and Intel subsidiaries have donated $10 million to support local communities, including matching of employee and retiree donations.

In 2020, we launched our Pandemic Response Technology Initiative (PRTI), a $50 million commitment to support essential workers, hard-hit businesses, and students of all ages with Intel-funded technology projects. Through PRTI, Intel partnered with more than 170 organizations on over 230 projects in multiple sectors.

In 2021, we expanded the scope of the PRTI to include additional program areas, and renamed it the Intel RISE Technology Initiative (IRTI). With IRTI we are building deeper relationships with our customers and other partners in line with our corporate purpose and goal to create shared value. Since then, the IRTI has created a broader, purpose-driven platform for action with new dedicated workstreams for social equity and human rights, accessibility, and climate action. Among the many lessons learned over the past two years is that technology is essential.

In the IRTI’s first year, we invested $9.5 million to fund 63 projects across six areas that align with the Intel RISE pillars: accessibility; economic recovery; education; health and life sciences; social equity and human rights; and sustainability and climate. Intel differentiated technology was utilized in 67% of the projects to solve unique challenges that plague individuals and organizations globally. Intel experts drive the success of IRTI projects, and we work with partners to identify issues and provide unique technology solutions to some of the world’s most complex challenges. Some of the most compelling projects funded by IRTI support diverse communities and strengthen our goal to support social equity and innovation. Intel has earmarked an additional $10 million for IRTI in 2022.

Below are examples of IRTI projects. For more, see “Collaborating for Technology Impact” in the Enabling section of this report.

Economic Recovery: Upskilling Program Supports Sports, Entertainment Workers.
As we emerge from the pandemic, we’re faced with millions of workers who lost their jobs looking for new opportunities, including 9.3 million people in the sports and entertainment industries. We partnered with Microsoft and the Innovation Institute for Fan Experience to launch a joint initiative to address the skills gap and provide workers with a path for upward professional mobility. This program provides access to online curated content to help workers increase their competency, grow their professional skills, and enhance their capabilities for current and future roles. Ensuring an inclusive economic recovery requires opportunities for those hit hardest by job losses to develop essential skills.

Health and Life Sciences: Integrating AI in Healthcare to Help Improve Patient Safety.
Through IRTI, we’re implementing Intel technology to help improve patient safety and care. One project leverages Intel AI technology to establish zones around a patient’s bed, implementing virtual rails that, if crossed, trigger an alert for the medical team. A trained technician also monitors multiple patient rooms from a central station with this AI-assisted tech and alerts the care team to help prevent falls in the hospital setting. Built with Intel® OpenVINO™ technology to ensure enhanced performance on Intel AI hardware, the solution has been deployed at Intermountain Healthcare. We will continue to uncover and address both patient and provider needs and optimize Intel technology to maximize our potential impact within the medical industry.

Sustainability and Climate: Establishing a Solar Community Hub in Brazil.
To address environmental and educational needs of the Boa Esperança community in Brazil, we partnered with Dell and the Sustainable Amazon Foundation to establish a solar community hub. The solar-powered hub provides Internet access, technology, and services that benefit about 1,500 people. Included is access to digital literacy skills, business management training, and healthcare through telehealth connections. Also included is the Amazon Deforestation Alert System, which combines inputs from local environmental monitors with AI technology to identify and predict occurrences of deforestation or environmental degradation in the Amazon region.

Corporate Responsibility and Our 2030 RISE Strategy

Intel’s purpose is to create world-changing technology that improves the life of every person on earth. Our continuing commitment to corporate responsibility is embedded in our purpose. That commitment, built on a strong foundation of transparency, governance, ethics, and respect for human rights, creates value for Intel and our stakeholders by helping us mitigate risks, reduce costs, build brand value, and identify new market opportunities to apply our technology to help address society’s most complex issues.

Through our long-term focus on advancing transparency, setting ambitious goals, and integrating corporate responsibility across all aspects of our business, we have driven meaningful results and challenged ourselves to achieve higher levels of performance over time. The Intel Foundation amplifies the impact of our employees’ volunteer hours and contributions to our local communities and catalyzes action and collaboration with others on social impact initiatives.

With our 2030 corporate responsibility “RISE” strategy and goals, we aim to create a more responsible, inclusive, and sustainable world, enabled through our technology and the expertise and passion of our employees. Our strategy not only raises the bar for ourselves and our supply chain, but also increases the scale and global impact of our work through new collaborations with our customers and a broad range of stakeholders. Our aim is to fully harness the power of technology to solve the increasingly complex and interconnected global challenges over the next decade and beyond. We know that acting alone, Intel cannot achieve the broad, societal impact we aspire to.

Since we announced our new strategy, we have been inspired by the many ways our employees have driven progress on our goals in the face of a challenging external environment, and by the positive feedback and increased interest from stakeholders to work together to accelerate global impact.

[Figure: the RISE framework (Responsible, Inclusive, Sustainable, Enabling) applied at three levels: Global Challenges, Technology Industry Initiatives, and Intel Operational and Supply Chain Goals.]

Responsible: Lead in advancing safety, wellness, and responsible business practices across our global manufacturing operations, our value chain, and beyond.

Inclusive: Advance diversity and inclusion across our global workforce and industry, and expand opportunities for others through technology, inclusion, and digital readiness initiatives.

Sustainable: Be a global leader in sustainability and enable our customers and others to reduce their environmental impact through our actions and technology.

Enabling: Through innovative technology and the expertise and passion of our employees, we enable positive change within Intel, across our industry, and beyond.

In developing our 2030 RISE strategy and goals, we leveraged external frameworks such as the United Nations Sustainable Development Goals symbolized above. For more, see “United Nations Sustainable Development Goals” in the Appendix.

2030 RISE Operational and Supply Chain Goals

This table outlines our 2030 operational and supply chain goals, including progress made in 2021. These goals are designed to continue to raise the bar for ourselves and to deliver greater value for our customers by helping them reach their corporate responsibility goals and targets.

Responsible

Employee Health, Safety, and Wellness
2030 Goal: Ensure that more than 90% of our employees believe that Intel has a strong safety culture and that 50% participate in our global wellness program.
Progress in 2021: During 2021, 87% of surveyed employees agreed with our “safety is a value” metrics. 27% of global Intel employees participated in wellness programs in 2021.

Supply Chain Human Rights
2030 Goal: Scale our supplier responsibility programs to ensure respect for human rights across 100% of our tier 1 contracted suppliers and higher risk tier 2 suppliers.¹
Progress in 2021: We reached 24% completion of our Tier 1 contracted suppliers, and remain on track to 100% completion by 2030.

Inclusive

Workforce Inclusion
2030 Goal:
• Double the number of women and underrepresented minorities in senior leadership roles.
• Exceed 40% representation of women in technical positions.
• Advance accessibility and increase the percentage of employees who self-identify as having a disability to 10% of our workforce.
• Ensure that inclusive leadership practices and accountability are embedded in our culture globally by creating and adopting an inclusive leader certification program.
Progress in 2021: We surpassed our milestone goal of reaching 1,375 women in leadership roles, ending 2021 with 1,449 women in senior leadership roles. Our US underrepresented minority senior leaders also increased, from 384 to 444. We set a milestone goal to increase representation of Black/African American employees in senior, director, and executive roles by 10% in the US. We exceeded this milestone, increasing representation by 11%. As of December 2021, 3.8% of Intel’s US workforce self-identified as having a disability.

Supplier Diversity
2030 Goal: Increase global annual spending with diverse suppliers² by 100% to reach $2 billion in annual spending by 2030.
Progress in 2021: In 2021, our annual spending with diverse suppliers reached $1.4 billion, a 10-fold increase compared to 2015, when we launched our diverse supplier program. We have made strong progress toward our 2030 goal and our three milestones focused on women-, minority-, and US Black-owned suppliers.

Sustainable

Climate and Energy
2030 Goal:
• Achieve 100% renewable electricity use across our global manufacturing operations.
• Conserve 4 billion kWh of electricity.
• Drive a 10% reduction in our absolute Scope 1 and 2 GHG emissions as we grow, informed by climate science.
• Increase product energy efficiency 10x for Intel client and server microprocessors to reduce our Scope 3 emissions.
Progress in 2021: In 2021, we invested in projects that enabled us to conserve approximately 162 million kWh of electricity. We have conserved a cumulative total of 486 million kWh of electricity since the baseline date. While our absolute Scope 1 and 2 greenhouse gas (GHG) emissions increased 14% from our 2019 baseline, our total GHG emissions (including Scopes 1, 2, and 3) decreased 2% from last year.

Net Positive Water
2030 Goal: Achieve net positive water use by conserving 60 billion gallons of water and funding external water restoration projects.
Progress in 2021: During 2021, we conserved 9.3 billion gallons of water and conserved 16.4 billion gallons cumulatively from the 2020 baseline. Intel projects also restored 2.3 billion gallons of water during 2021 and 3.6 billion gallons cumulatively from the 2020 baseline, to our watersheds.

Zero Waste³/Circular Economy
2030 Goal: Achieve zero total waste to landfill and implement circular economy strategies for 60% of our manufacturing waste streams in partnership with our suppliers.
Progress in 2021: We sent 5% total waste to landfill and implemented circular strategies for 65% of our manufacturing waste.

Enabling

Community Impact
2030 Goal: Deliver 10 million volunteer hours to improve our local communities, including an increase in skills-based volunteerism.
Progress in 2021: During 2021, our employees donated 848,000 hours of service in our local communities.

¹ Tier 1 suppliers are companies from which Intel makes direct purchases (more than 9,000 suppliers in 2021). Tier 1 contracted suppliers are subject to formal agreements with Intel based on the products and services provided and spends (approximately 1,500 at the beginning of 2021). Tier 2 suppliers are companies from which Intel’s tier 1 suppliers make direct purchases.
² We recognize diverse suppliers as businesses that are 51% owned and operated by at least one of the following: women; minorities as defined by the country or region where the business was established; veterans/service-disabled veterans; persons who are lesbian, gay, bisexual, or transgender; or persons who are disabled. While Intel recognizes these categories, they may vary in accordance with local law.
³ Intel defines zero waste as less than 1% sent to landfill.
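For readers who want to compare the cumulative figures reported above against their 2030 targets, the arithmetic can be sketched as follows. This is an illustrative calculation only, not part of Intel's reporting methodology: the helper name `share_of_goal` is hypothetical, and the sketch assumes each target can be read as a simple ratio of the reported figure to the stated goal.

```python
# Illustrative only: inputs are the figures reported in the goals table above.
# share_of_goal is a hypothetical helper, not an Intel-defined metric.

def share_of_goal(reported: float, target: float) -> float:
    """Return the reported figure as a percentage of the 2030 target."""
    return 100.0 * reported / target

progress = {
    # 486 million kWh conserved cumulatively vs. a 4 billion kWh goal
    "electricity conserved": share_of_goal(486e6, 4e9),
    # 16.4 billion gallons conserved cumulatively vs. a 60 billion gallon goal
    "water conserved": share_of_goal(16.4e9, 60e9),
    # $1.4 billion annual diverse-supplier spending vs. a $2 billion goal
    "diverse supplier spending": share_of_goal(1.4e9, 2e9),
}

for name, pct in progress.items():
    print(f"{name}: about {pct:.0f}% of the 2030 target")
```

Under these assumptions, the three measures sit at very different points along the way to 2030, which is why the report discusses each goal separately rather than as a single combined score.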

Technology Industry Initiatives

Building on the foundation of our operational and supply chain goals, we will work with the technology ecosystem to accelerate improvements across our industry. We know that we can go faster and be more effective working together.

Responsible minerals. Expand our efforts beyond conflict minerals 1 to cover all minerals used in semiconductor manufacturing and apply the learnings to lead our industry in creating new sourcing standards. In 2021, we sent our first expanded minerals survey for aluminum, copper, nickel, and silver to suppliers contributing these materials in our Intel-manufactured microprocessors. This is an important step in our RISE strategy, as we begin mapping our supply chains for our highest priority minerals. Although sourcing of these minerals is not yet widely reported, we received a response from 89% of relevant suppliers. Additionally, we continue to partner with industry associations to ensure standards are in place to enable our ultimate goal of responsible sourcing for all the minerals in our supply chain. We will continue to identify the highest priority minerals in pursuit of our 2030 RISE Goals.

Responsible mobility. Collaborate with our industry and ecosystem partners to advance the adoption of technology-neutral safety standards to reduce traffic accidents globally. Intel’s Mobileye business is a global leader in driving assistance and self-driving solutions. The company’s product portfolio employs a broad set of technologies covering computer vision, machine learning-based sensing, and data analysis for advanced driver assistance systems and autonomous vehicles (AVs).
Mobileye technology helps keep the drivers and passengers of more than 100 million vehicles safer today. Intel’s Responsibility-Sensitive Safety (RSS) has become a leading model for global AV safety frameworks. Numerous standards bodies are beginning to include RSS in standards development activities.

Inclusion Index. Drive full inclusion and accessibility across the technology industry by creating and implementing a Global Inclusion Index with common metrics to advance progress. In 2021, we launched the Alliance for Global Inclusion, a coalition focused on creating an inclusion index and working to collectively advance progress in four critical areas: leadership representation, inclusive language, inclusive product development, and STEM readiness in under-resourced communities. Since its launch, the Alliance has more than doubled in size, from five to 12 members, and has made meaningful progress on creating an Inclusion Index to serve as a benchmark for companies to track diversity and inclusion improvements, provide information on current best practices, and highlight opportunities to improve outcomes across industries. In December 2021, the Alliance published suggested guidance to secure diverse representation on boards of directors.

Inclusive pipeline. Expand the inclusive pipeline of talent for our industry through innovative global education initiatives and STEM programs for girls and underrepresented groups. Building a diverse and inclusive workforce and industry requires continued collective investments and innovative approaches to increasing the diversity of the talent pipeline and expanding access to the education resources needed to pursue careers in our field. In 2021, we scaled a number of programs and collaborations with customers, governments, and other stakeholders to bridge the gender gap in STEM fields, including our work with Women in AI, a nonprofit working to empower women and minorities as AI and data experts, innovators, and leaders.
In early 2021, we also pledged $5 million to North Carolina Central University, a historically Black college or university, to create a tech law and policy center.

Sustainable manufacturing. Create a collective approach to reducing emissions for the semiconductor manufacturing industry and increase the use of technology to reduce climate impact in global manufacturing. In the 1990s, Intel led an initiative to eliminate the use of Class 1 ozone-depleting substances in semiconductor manufacturing. Our 2030 climate goals are informed by climate science, but due to our (and our industry’s) early emissions reductions and demand growth for semiconductors, it remains challenging to gain formal approval for a target under the existing methodology of the Science-Based Targets Initiative (SBTi). In 2021, we continued to work with industry stakeholders to assess the potential for the development of a sector-specific approach to setting science-based GHG emissions-reduction targets for the semiconductor manufacturing industry. The goal is to expand the number of companies in our sector that set approved science-based targets.

Sustainable chemistry. Enable greener and circular chemistry strategies across the technology industry value chain by transforming chemical footprint methodology. In 2021, Intel continued efforts to refine the chemical footprint methodology for use in evaluation of the chemical supply chain, as well as to integrate the methodology into the chemical supply chain to enable better assessment of full lifecycle impact. In addition, we continued to provide webinars to further the promotion of the chemical footprint methodology as a technology industry initiative. Sustainable chemistry baselines were completed for the current manufacturing technologies, as well as for the year 2020.
We completed an initial analysis of current chemical uses, and the chemistries identified as having the highest impact have been included in a set of priority projects for further scoping and disposition.

1 Conflict minerals, as defined by the US Securities and Exchange Commission (SEC), is a broad term that means tin, tantalum, tungsten, and gold (3TG), regardless of whether these minerals finance conflict in the Democratic Republic of the Congo (DRC) or adjoining countries.

2030 Global Challenges

We have identified key areas where we believe we can best leverage our manufacturing expertise, unique position within the technology ecosystem, and the wide range of technology we enable to bring others together to accelerate action on key global challenges to save and enrich lives. These areas include health and well-being, inclusion and economic opportunity, and climate change.

Revolutionize how technology will improve health and safety

We will apply our expertise, resources, and technology to enable others to harness the power of technology to improve health, safety, and wellness, including in the areas of healthcare and life sciences, manufacturing, and transportation. In 2021, we progressed on our global challenge to apply technology to advance healthcare and safety on multiple fronts. Included were collaborations aimed at facilitating the analysis of data from COVID-19 patients to determine the origin and expected severity of various coronavirus strains, and an edge device that provides information and tools for healthcare workers responsible for vaccine distribution.

Make technology fully inclusive and expand digital readiness

We will advance inclusion and accessibility for millions of people who currently do not have the technology skills or resources needed to access educational, economic, and community resources in our increasingly digital economy. During 2021, we drove a wide range of digital readiness programs aimed at empowering non-technical audiences, students, professionals, and leaders with the skills, tools, and opportunities needed to use technology effectively and benefit from the digital economy.
We scaled the programs by working with more than 20 countries, 50 education ministries, and other partners, impacting over 13,000 institutions and 1 million people globally.

Achieve carbon neutral computing to address climate change

While we continue to reduce our own global manufacturing climate footprint, we will also take actions with others to collectively expand the technology “handprint,” transforming product energy use and design and applying technology to reduce computing-related climate impacts across the rest of the global economy. Progress in 2021 included advances across sustainability in manufacturing, use phase, and asset retirement for client PCs. These include advances in specifications, further adoption of specifications, and advances in the Intel® NUC systems, reference platforms, and OEM partnerships. In the data center, progress was made across both the “use phase” and the “create phase,” with specific advances around cooling solutions, product roadmap, and industry engagement with the Open Compute Project. We continue to engage in a range of organizations to build a supportive policy environment for private sector leadership on climate change, and advocate to innovate and invest in climate solutions through our engagement in environmental policy think tanks such as the Center for Climate Change and Energy Solutions.

Awards and Recognitions

Third-party ratings and rankings give us valuable feedback on our programs and practices, and help drive continuous improvement over time. Below is a selection of the corporate responsibility-related awards and recognitions that Intel received in 2021 and in the first quarter of 2022.

3BL Media. 100 Best Corporate Citizens
AISES. Top 50 Workplaces for Indigenous STEM Professionals
American Association of People with Disabilities and Disability:IN. Disability Equality Index
As You Sow. Clean200
AnitaB.org. America’s Top Corporations for Women Technologists
Barron’s. #1 Most Sustainable Company
Bloomberg. Bloomberg Gender-Equality Index
CDP. “A” Water Security Rating, “A” Climate Change Rating, Supplier Engagement Leadership Rating
Center for Political Accountability. CPA-Zicklin Index of Corporate Political Disclosure and Accountability, Trendsetter Company
Center for Resource Solutions. Renewable Energy Markets Asia Award
Corporate Knights. Global 100 Most Sustainable Corporate Citizens
Disability:IN. ERG of the Year
Dow Jones Sustainability Index. North America Index
Ethisphere Institute. World’s Most Ethical Companies
Forbes. World’s Best Employers, America’s Best Employers for Women, America’s Best Employers for Diversity, America’s Best Employers for New Grads, and America’s Best Employers for Veterans
Fortune. Top 20 Fortune 500 Companies on Diversity and Inclusion
FTSE Group. FTSE4Good Index 1
Gartner. Supply Chain Top 25
Hispanic Association of Corporate Responsibility. Corporate Inclusion Index 5-Star Rating for Governance
Human Rights Campaign. Corporate Equality Index
ISS. 1 rating in both Environment & Social QualityScore 2
JUST Capital and Forbes. JUST 100
LATINA Style 50. Top 50 Best Companies for Latinas to Work in the US
Minority Engineer. Top 50 Employers
MSCI. World ESG Leaders Index 3
National Business Inclusion Consortium. Best-of-the-Best Corporations for Inclusion
Newsweek. America’s Most Responsible Companies
Religious Freedom & Business Foundation. Corporate Religious Equity, Diversity and Inclusion Index
RepTrak. 2021 Global RepTrak® 100
US Environmental Protection Agency. #3 Ranking on Green Power Partnership National Top 100
Wall Street Journal. Management Top 250
Women’s Business Enterprise National Council. Top Corporations for Women’s Business Enterprises
WE Connect International. Top 10 Global Champions for Supplier Diversity Inclusion
Women Engineer Magazine. Top 50 Employers – Reader’s Choice
Working Mother. 100 Best Companies for Multicultural Women

1 FTSE Russell (the trading name of FTSE International Limited and Frank Russell Company) confirms that Intel Corporation has been independently assessed according to the FTSE4Good criteria, and has satisfied the requirements to become a constituent of the FTSE4Good Index Series. Created by the global index provider FTSE Russell, the FTSE4Good Index Series is designed to measure the performance of companies demonstrating strong Environmental, Social and Governance (ESG) practices. The FTSE4Good indices are used by a wide variety of market participants to create and assess responsible investment funds and other products.

2 Scores as of end of year 2021.

3 The inclusion of Intel Corporation in any MSCI Index, and the use of MSCI logos, trademarks, service marks or index names herein, do not constitute a sponsorship, endorsement or promotion of Intel Corporation by MSCI or any of its affiliates. The MSCI Indexes are the exclusive property of MSCI. MSCI and the MSCI Index names and logos are trademarks or service marks of MSCI or its affiliates.

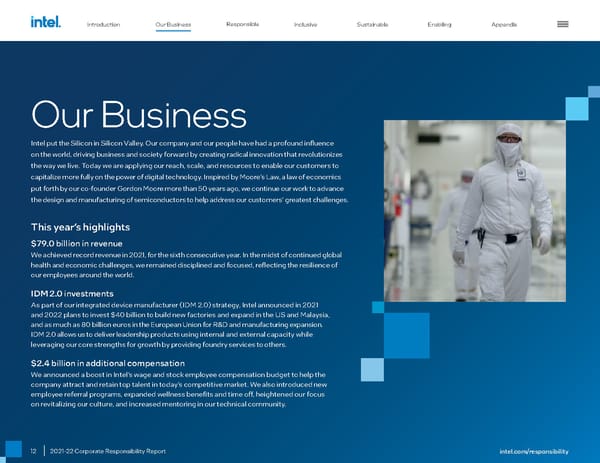

Our Business

Intel put the Silicon in Silicon Valley. Our company and our people have had a profound influence on the world, driving business and society forward by creating radical innovation that revolutionizes the way we live. Today we are applying our reach, scale, and resources to enable our customers to capitalize more fully on the power of digital technology. Inspired by Moore’s Law, a law of economics put forth by our co-founder Gordon Moore more than 50 years ago, we continue our work to advance the design and manufacturing of semiconductors to help address our customers’ greatest challenges.

This year’s highlights

$79.0 billion in revenue. We achieved record revenue in 2021, for the sixth consecutive year. In the midst of continued global health and economic challenges, we remained disciplined and focused, reflecting the resilience of our employees around the world.

IDM 2.0 investments. As part of our integrated device manufacturer (IDM 2.0) strategy, Intel announced in 2021 and 2022 plans to invest $40 billion to build new factories and expand in the US and Malaysia, and as much as 80 billion euros in the European Union for R&D and manufacturing expansion. IDM 2.0 allows us to deliver leadership products using internal and external capacity while leveraging our core strengths for growth by providing foundry services to others.

$2.4 billion in additional compensation. We announced a boost in Intel’s wage and stock employee compensation budget to help the company attract and retain top talent in today’s competitive market. We also introduced new employee referral programs, expanded wellness benefits and time off, heightened our focus on revitalizing our culture, and increased mentoring in our technical community.

Company Profile

The world is becoming more digital, and computing more pervasive. Semiconductors are the underlying technology powering the digitization of everything, which is being accelerated by four superpowers: ubiquitous compute, cloud-to-edge infrastructure, pervasive connectivity, and artificial intelligence (AI). Together these superpowers reinforce and amplify one another, and will exponentially increase the world’s need for computing by packing even more processing capability onto ever-smaller microchips. We intend to lead the industry by harnessing these superpowers for our customers’ growth and our own.

Our 2021 revenue of $79 billion was up year over year, with our Client Computing Group revenue up 1% and our Data Center Group revenue down 1%, both amid the effects of industry-wide supply constraints. Our Internet of Things Group and Mobileye both achieved strong results on higher demand amid recovery from the economic impacts of COVID-19.

Our CEO Pat Gelsinger joined Intel on February 21, 2021. He returns to Intel, where he previously spent 30 years of his career, learned at the feet of Intel’s founders, and served as our first Chief Technology Officer.

On March 23, 2021, we announced our “IDM 2.0” strategy, which combines our internal factory network, strategic use of external foundries, and our new Intel Foundry Services (IFS) business. With IFS, we intend to build a world-class foundry business to meet the growing global demand for semiconductors. We plan to differentiate our foundry offerings from those of others through a combination of leading-edge packaging and process technology, committed capacity in the US and Europe available to customers globally, and a world-class intellectual property (IP) portfolio.
We believe our IDM 2.0 strategy will enable us to deliver leading process technology and products to meet growing demand, while providing superior capacity and supply resilience and an advantageous cost structure. Delivering on our IDM 2.0 strategy and growth ambitions requires attracting, developing, and retaining top talent. We are committed to creating an inclusive workplace where the world’s best engineers and technologists can fulfill their dreams and create technology that improves the life of every person on the planet.

We are an industry leader and a catalyst for technology innovation and products that revolutionize the way we live. We are committed to harnessing the breadth and scale of our reach to have a positive effect on business, society, and the planet. Our purpose is to create world-changing technology that improves the life of every person on the planet.

The sections of this Company Profile derived from our 2021 Annual Report on Form 10-K speak as of January 27, 2022, unless another date is indicated. Beginning with our first quarter 2022 results, we report under six business units, following an update to segment reporting that aligns with our organizational structure and business strategy. More information regarding this reporting structure, including recasts of our historical financial statements for the past three years, is available through our filings with the Securities and Exchange Commission.

How We Organize Our Business

Client Computing Group. Includes platforms designed for end-user form factors, focusing on higher growth segments of 2-in-1, thin-and-light, commercial and gaming, and growing adjacencies such as connectivity and graphics.

Data Center Group. Includes workload-optimized platforms and related products designed for cloud service providers, enterprise and government, and communications service providers market segments.

Internet of Things Group. Includes high-performance compute solutions for targeted verticals and embedded applications in market segments such as retail, industrial, and healthcare.

Mobileye. Includes comprehensive solutions required for autonomous driving, including compute platforms, computer vision, and machine learning-based sensing, mapping and localization, driving policy, and active sensors in development, utilized for both Robotaxi and consumer-level autonomy.

Non-Volatile Memory Solutions Group. Includes memory and storage products like Intel® 3D NAND technology, primarily used in SSDs. 1

Programmable Solutions Group. Includes programmable semiconductors, primarily FPGAs and structured ASICs, and related products for communications, cloud and enterprise, and embedded market segments.

Our Customers

Our portfolio of products and capabilities positions us well to play a larger role in our customers’ success. Beyond the CPU, we are delivering a growing family of xPU products (graphics processing units [GPUs], accelerators, and field-programmable gate arrays [FPGAs]) to help meet our customers’ increasingly diverse computing needs. We sell our products primarily to original equipment manufacturers (OEMs), original design manufacturers (ODMs), and cloud service providers.
In addition, our customers include other manufacturers and service providers, such as industrial and communication equipment manufacturers and other cloud service providers, who buy our products through distributor, reseller, retail, and OEM channels throughout the world. Our worldwide reseller sales channel consists of thousands of indirect customers, systems builders that purchase Intel® processors and other products from our distributors. For additional information, refer to the 2021 Intel Annual Report on Form 10-K.

Our Competitors

We face intense competition across our product portfolio from companies offering platform products; accelerator products such as GPUs; other accelerator products such as application-specific integrated circuits (ASICs), application-specific standard products, and FPGAs; memory and storage products; connectivity and networking products; and other semiconductor products. We also compete with internally developed semiconductors from OEMs, cloud service providers, and others, some of whom are customers. For additional information, refer to the 2021 Intel Annual Report on Form 10-K.

Our Products

Our product offerings provide end-to-end solutions, scaling from edge computing 2 to 5G networks, the cloud, and the emerging fields of AI and autonomous driving. Products, such as our gaming CPUs, may be sold directly to end customers, or they may be further integrated by our customers into end products such as notebooks and storage servers. Combining some of these products (for example, integrating FPGAs and memory with Intel® Xeon® processors in a data center solution) enables incremental synergistic value and performance.

End-to-End Product Portfolio

In 2021, we launched new products, such as the 12th Gen Intel® Core™ processors (previously referred to as Alder Lake), the first on the Intel 7 process, with real-world performance for enthusiast gamers and professional creators.
In addition, we launched the 3rd Gen Intel® Xeon® Scalable processor (Ice Lake), which boasts up to 40 cores and delivers a significant increase in performance, on average, compared to the previous generation. These Intel Xeon processors include a set of built-in security features, cryptographic acceleration, and AI. For more information, refer to the 2021 Intel Annual Report on Form 10-K.

1 In October 2020, we signed an agreement with SK hynix to divest our NAND memory business. The NAND memory business makes up our NSG segment. The transaction will occur over two closings, the first of which was completed on December 29, 2021, subsequent to our fiscal 2021 year end.

2 Placing resources to move, store, and process data closer to where data is generated and consumed.

[Figure: End-to-End Product Portfolio, covering Intel® Wi-Fi 6 Solutions, Autonomous Driving, Client Connectivity, Cloud Computing, Client Computing, AI and Analytics, 5G Network, and IoT]

We also introduced a broad, data-centric portfolio for 5G network infrastructure, including a System-on-a-Chip (SoC) for wireless base stations, structured ASICs for 5G network acceleration, and a 5G network-optimized Ethernet Network Interface Controller (NIC). In addition, we revealed the brand for our upcoming consumer high-performance graphics: Intel® Arc™ graphics. The Intel Arc brand will cover hardware, software, and services, and will span multiple hardware generations, with the first generation shipping to OEMs in early 2022. On December 29, 2021, we completed the first closing of the divestiture of our NAND memory business to SK hynix, Inc. We intend to invest transaction proceeds to deliver leadership products and advance our long-term growth priorities. For more information about our products, read our 2021 Intel Annual Report on Form 10-K.

Product Responsibility and Impact

We strive to minimize the environmental impact of our products at all phases in their life cycle: development, production, use, and ultimate disposal. We also consider accessibility during product development, and design products that are accessible to a wider range of users, including people with disabilities. For more information, see “Product Ecology” and “Product Energy Efficiency” in the Sustainable section and “Making Technology Fully Inclusive” in the Inclusive section of this report.

We recognize that innovation, growth, and the success of our business and our industry depend on individuals’ trust in their use of technology and in the responsible, protected collection and processing of their data. We also do not tolerate our products being used to violate human rights.
For more detail, see “Respecting Human Rights” later in this section of the report. Examples of innovative applications of our technology and collaborations with our customers and other stakeholders are highlighted in the RISE global challenge discussions at the end of each section of this report.

From Sand to Silicon

The transistor is the engine that powers every Intel processor. To build a modern computer chip, our engineers place billions of these tiny switches into an area no larger than a fingernail. Watch the video to see how Intel builds the world’s most complex devices.

Cybersecurity and Product Security

At Intel, security comes first both in the way we work and in what we work on. Our culture and practices guide everything we build, with the goal of delivering the highest performance and optimal protections. As with previous reports, the 2021 Intel Product Security Report demonstrates our Security First Pledge and our endless efforts to proactively seek out and mitigate security issues. We recognize that massive shifts in how we live, work, connect, and communicate increase the need for technologies that people trust, built on a foundation of security. We prioritize security in two ways: in the way we work, through our culture and practices aimed at delivering high performance and protections in everything we build; and in what we work on, through our relentless pursuit of security-driven innovations that help our customers tackle today’s toughest challenges.

Security Technologies Strategy. To meet the challenges of computing that spans cloud to edge and devices, security must be a continual focus. We understand the complexity that results from the ongoing computing transformation. We have deep experience in enabling security, as well as a comprehensive suite of technologies that help secure entire systems and deliver defense in depth. We engineer security solutions to meet specific challenges centered around three key priorities: foundational security to help systems come up as expected, workload protection to improve security of data in use, and software reliability to build in hardware-based protections against common software threats.

Comprehensive Security Practices. Through the Security Development Lifecycle (SDL), we apply security and privacy principles at six phases, from planning through release and post-deployment. SDL covers Intel® hardware, firmware, and software products.
In release and post-deployment, an essential part of our product support is ongoing security research and mitigations. In 2021 we expanded our Bug Bounty Program, which incentivizes security researchers to report vulnerabilities in Intel products. We began rewarding researchers with bonus multipliers for findings in specific areas of interest, leading to mitigations and improved security in an array of products. We also work across the industry to improve security; when a vulnerability is identified, we work with affected partners to develop and release mitigations. We align on disclosure to minimize potential threats while we work to address the vulnerability.

Security Research. Continuous improvement is made through investments in offensive research on the security of our products. We have a dedicated team of experts who continually research and test products internally. Internal security research in 2021 accounted for 50% of the issues addressed, and an additional 43% were reported through Intel’s Bug Bounty Program. This work is scaled through practices that include red teaming and hackathons. We use what we learn to improve our products and practices, and we collaborate with world-class industry partners, global security researchers, and academic institutions to advance security research across the industry. For more information, visit Product Security at Intel or read our Intel Product Security 2021 Report. In addition, our Cyber Security Inside podcast series provides insights on cybersecurity-related trends to information security and industry executives.

[Figure: Security Development Lifecycle, showing six phases: planning and assessment, architecture, design, implementation, security validation, and release/post deployment]

Securing Intel’s Supply Chain

Our sourcing and manufacturing practices are built on decades of experience and aligned to industry-leading processes.
Our supply chain security program leverages this expertise and has embedded security controls throughout the vendor lifecycle. Intel’s supply chain security risk management program is derived from standard industry risk management frameworks such as NIST and ISO and provides security assurance through the integration of security controls throughout sourcing and supplier management practices. Security expectations begin at supplier selection. Expectations are then reinforced through contractual security terms and conditions, recurring information security audits, ongoing security key performance indicators, and recurrent required training. Our Cybersecurity Supply Chain Risk Management (C-SCRM) program executes hundreds of information security supplier audits annually and is aligned to standard industry information security management frameworks, including ISO 27001 and NIST 800-30. Additionally, we continuously monitor the cybersecurity posture of our suppliers through a third-party security ratings platform and have a dedicated third-party cyber incident response team. We are also committed to advancing evolving supply chain security standards and policies by working with governments, organizations, and industries. Visit our Sourcing and Manufacturing Security site to learn more.

Our Capital

We believe that our integrated approach to financial matters, corporate governance, and corporate responsibility drives increased accountability, improves decision making, and ultimately creates long-term value. In line with the International Integrated Reporting Council’s International <IR> Framework and six capitals concept, we have outlined how we deploy capital to execute our strategy in a way that seeks to reflect our corporate values, help our customers succeed, and create value for our stakeholders. Each of our six forms of capital, summarized below, plays a critical role in our long-term value creation. For more detail, see the 2021 Intel Annual Report on Form 10-K.

Financial Capital. Our financial capital allocation strategy focuses on building stockholder value. Our first allocation priority is to invest in R&D and capital spending to capitalize on the opportunity presented by the world’s demand for semiconductors. Our second allocation priority is to invest in and acquire companies that complement our strategic objectives. Our third allocation priority is to return cash to stockholders. We achieve this through our dividend and share repurchase programs. As we invest in our IDM 2.0 strategy, our allocation priorities will shift more heavily toward investing in the business and away from share repurchases. We expect our future stock repurchases to be significantly below our levels from the last few years. During 2021, we paid $5.6 billion in dividends, similar to the amount paid in 2020. We have paid a cash dividend in each of the past 117 quarters. For additional 2021 financial information, see the 2021 Intel Annual Report on Form 10-K.

We deploy various forms of capital to execute our strategy in a way that seeks to reflect our corporate values, help our customers succeed, and create value for our stakeholders.
Capital, strategy, and value:

Financial
Strategy: Leverage financial capital to invest in ourselves and drive our IDM 2.0 strategy, supplement and strengthen our capabilities through acquisitions, and provide returns to stockholders.
Value: We strategically invest financial capital to create long-term value and provide returns to our stockholders.

Intellectual
Strategy: Invest significantly in R&D and IP to enable us to deliver on our accelerated process technology roadmap, introduce leading x86 and xPU products, and develop new businesses and capabilities.
Value: We develop IP to enable next-generation products, create synergies across our businesses, expand into new markets, and establish and support our brands.

Manufacturing
Strategy: Aligned with our IDM 2.0 strategy, invest to efficiently build manufacturing capacity to address growing global demand for semiconductors.
Value: Our geographically balanced manufacturing scope and scale enable us to provide our customers and consumers with a broad range of leading-edge products.

Human
Strategy: Continue to build a diverse, inclusive, and safe work environment to attract, develop, and retain top talent needed to build transformative products.
Value: Our talented employees enable the development of solutions and enhance the intellectual and manufacturing capital critical to helping our customers win the technology inflections of the future.

Social and Relationship
Strategy: Build trusted relationships for both Intel and our stakeholders, including employees, suppliers, customers, local communities, and governments.
Value: We collaborate with stakeholders on programs to empower underserved communities through education and technology, and on initiatives to advance accountability and capabilities across our global supply chain, including accountability for the respect of human rights.

Natural
Strategy: Continually strive to reduce our environmental footprint through efficient and responsible use of natural resources and materials used to create our products.
Value: With our proactive efforts, we seek to mitigate climate and water impacts, achieve efficiencies, lower costs, and position ourselves to respond to the expectations of our stakeholders.

Intel Capital. For over three decades, Intel Capital, our global investment organization, has invested more than $20 billion to back standout, early-stage technology startups across four key areas: silicon, frontier, devices, and cloud. Intel Capital-backed companies have produced more than 700 IPOs and exits, and created more than $100 billion in market value in the past 10 years.

Financial results, 2020 and 2021:
Revenue ($B): GAAP $77.9 (2020), $79.0 (2021); non-GAAP $72.9 (2020), $74.7 (2021)
Operating Income ($B): GAAP $23.7 (2020), $19.5 (2021); non-GAAP $24.4 (2020), $22.2 (2021)
Diluted EPS ($): GAAP $4.94 (2020), $4.86 (2021); non-GAAP $5.10 (2020), $5.47 (2021)

The preparation of consolidated financial statements is in conformity with US Generally Accepted Accounting Principles (GAAP). We have included key metrics that we use to measure our business, some of which are non-GAAP measures. See “Non-GAAP Financial Measures” in the Appendix for a reconciliation of non-GAAP operating income and non-GAAP EPS to comparable GAAP measures.

Intellectual Capital. R&D investment is critical to enable us to deliver on our accelerated process technology roadmap, introduce leading products, and develop new businesses and capabilities. Our objective with each new generation of products is to improve user experiences and value through advances in performance, power, cost, connectivity, security, form factor, and other features. We also focus on reducing our design complexity, reusing IP, and increasing ecosystem collaboration to improve our efficiency. We seek to protect our R&D efforts through our IP rights and may augment R&D initiatives by acquiring or investing in companies, entering into R&D agreements, and directly purchasing or licensing technology. We own and develop significant IP and related IP rights around the world that support our products, services, R&D, and other activities and assets. Our IP portfolio includes patents, copyrights, trade secrets, trademarks, mask works, and other rights. Intel ranked #6 in patents granted for 2021 by the US Patent and Trademark Office, our seventh straight year in the top 10 (source: IFI CLAIMS Patent Services, as of January 5, 2022). For additional information regarding our IP rights, see the 2021 Intel Annual Report on Form 10-K.

Manufacturing Capital. Our IDM 2.0 strategy allows us to deliver leadership products through the use of internal and external capacity while leveraging our core strengths for growth by providing foundry services to others. IDM 2.0 combines three factors. First, we will continue to build the majority of our products in Intel fabs. Second, we expect our use of third-party foundry capacity to grow and to include manufacturing for a range of modular tiles on advanced process technologies. Third, we intend to build a world-class foundry business with Intel Foundry Services (IFS), which will combine leading-edge process and packaging technology, committed capacity in the US and Europe, and a world-class IP portfolio for customers, including x86 cores.

Our work to advance the design and manufacturing of semiconductors enables new products with higher performance while balancing power efficiency, cost, and size. We continue to work across our supply chain to minimize disruptions, improve productivity, and increase overall capacity and output to meet customer expectations. In 2021, our factories performed well in a highly dynamic environment, where we adapted to rapid demand shifts and industry component shortages affecting us and our customers.

As of our fiscal 2021 year-end, we had 10 manufacturing sites: six wafer fabrication and four assembly/test facilities. The accompanying map shows these factory sites and the countries where we had a significant R&D and/or sales presence. The Dalian factory was sold subsequent to year-end as part of the first closing of the divestiture of our NAND Memory business. The majority of our logic wafer manufacturing is conducted in the US. To accelerate our IDM 2.0 strategy, in March 2021 we announced plans to invest $20 billion to build two additional fabs in Arizona, on which we broke ground in September 2021. In 2021, we also announced an approximately $10.5 billion investment to equip our Rio Rancho, New Mexico and Malaysia sites for advanced packaging manufacturing. In 2022, we announced plans to invest more than $20 billion in the construction of two leading-edge fabs at a new Intel site in Ohio, and up to 80 billion euros to advance a world-class semiconductor ecosystem in the European Union.
The European investment will include a new fab mega-site in Germany; an R&D hub in France; and R&D, manufacturing, and foundry services in Ireland, Italy, Poland, and Spain.

[Map: Intel manufacturing sites and presence. Legend: Wafer Fabs, Assembly and Test, Intel Presence. Sites labeled: Oregon, Arizona, New Mexico, Costa Rica, Ireland, Israel, Malaysia, Chengdu, Vietnam, Dalian. Intel Worldwide Headquarters: Santa Clara, California.]

“Intel has been a leader in sustainability results for decades. With leadership comes responsibility. We’re now raising the bar and entering an exciting era to achieve net-zero greenhouse gas emissions across our operations by 2040. This will require significant innovation and investment, but we are committed to do what it takes and will work with the industry to achieve this critical mission.”
Keyvan Esfarjani, Executive Vice President and Chief Global Operations Officer at Intel

Our global supply chain supports internal partners across architecture, product design, technology development, manufacturing and operations, sales and marketing, and business units, and our supply ecosystem comprises thousands of suppliers globally. Our mission is to enable product and process leadership, industry-leading total cost of ownership, and uninterrupted supply for our customers. In addition to our own manufacturing capacity, we continue to expand our use of third-party foundries.

Human Capital. Our human capital strategy is grounded in our belief that our people are fundamental to our success. Delivering on our IDM 2.0 strategy and growth ambitions requires attracting, developing, and retaining top talent from across the world. The digitization of everything is driving growth and global demand for semiconductors. Combined with the tightening labor market and economic recovery from COVID-19, this has driven a significant increase in competition throughout the industry to attract and retain talent, especially technical talent. We invest significant resources to develop the talent needed to remain at the forefront of innovation and make Intel an employer of choice. We offer extensive training programs and provide rotational assignment opportunities. We are committed to creating an inclusive workplace where the world’s best engineers and technologists can fulfill their dreams and create technology that improves the life of every person on the planet. We invest in our highly skilled workforce by creating practices, programs, and benefits that support the evolving world of work and our employees’ needs.

Social and Relationship Capital. We are committed to engaging in corporate responsibility and sustainability initiatives that support our communities and help us develop trusted relationships with our stakeholders.
Proactive engagement with our stakeholders and investments in social impact initiatives, including those aligned with the United Nations Sustainable Development Goals, advance our position as a leading corporate citizen and create shared value for Intel, our global supply chain, and our communities. We provide high-skill, high-paying jobs around the world. Many of these are manufacturing and R&D jobs located in our own domestic and international factories. We also benefit economies through our R&D ecosystem spending, sourcing activities, consumer spending by our employees, and tax payments. In addition, we make sizable capital investments and provide leadership in public-private partnerships to spur economic growth and innovation. We also aim to empower people through education and advance social initiatives to create career pathways into the technology industry. In addition to the direct employment Intel’s operations provide, our operational spending, capital investment, and distribution channel support additional jobs. We engage third-party organizations to conduct analyses of the economic impact of our operations, including a US impact study in 2021 that found that for every US Intel job, Intel’s economic activity in the US indirectly supports an additional 13 jobs. For more information on our social and relationship capital, see “Stakeholder Engagement” and “Supply Chain Responsibility” later in this section.

Natural Capital. Driving to the lowest possible environmental footprint as we grow helps us create efficiencies, lower costs, and respond to the needs of our stakeholders. We invest in sustainability projects and set company-wide environmental targets to drive reductions in greenhouse gas emissions, energy use, water use, and waste generation.
We build energy efficiency into our products to help our customers lower their own emissions and energy costs, and we collaborate with policymakers and other stakeholders to use technology to address environmental challenges. For more information, see the Sustainable section of this report.

We consider numerous indicators in determining the success of our capital deployment in creating value. The above graphic shows highlights of value created through 2021.

4 See “Non-GAAP Financial Measures” in the Appendix.