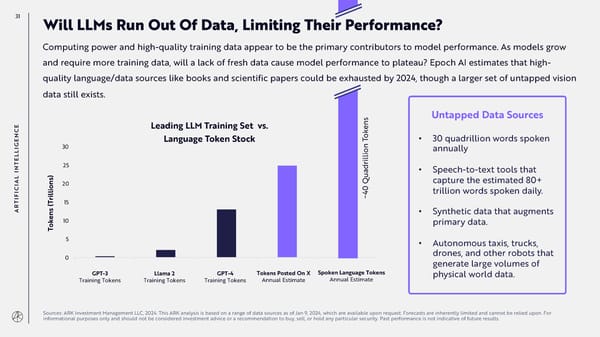

Will LLMs Run Out Of Data, Limiting Their Performance?

Computing power and high-quality training data appear to be the primary contributors to model performance. As models grow and require more training data, will a lack of fresh data cause model performance to plateau? Epoch AI estimates that high-quality language data sources like books and scientific papers could be exhausted by 2024, though a larger set of untapped vision data still exists.

[Figure: Leading LLM Training Set vs. Language Token Stock. Y-axis: Tokens (Trillions), 0 to 30. Bars: GPT-3 Training Tokens, Llama 2 Training Tokens, GPT-4 Training Tokens, Tokens Posted On X (Annual Estimate), Spoken Language Tokens (Annual Estimate). Chart annotation: ~40 quadrillion.]

Untapped Data Sources

• 30 quadrillion words spoken annually.
• Speech-to-text tools that capture the estimated 80+ trillion words spoken daily.
• Synthetic data that augments primary data.
• Autonomous taxis, trucks, drones, and other robots that generate large volumes of physical world data.

Sources: ARK Investment Management LLC, 2024. This ARK analysis is based on a range of data sources as of Jan 9, 2024, which are available upon request. Forecasts are inherently limited and cannot be relied upon. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Past performance is not indicative of future results.

Annual Research Report | Big Ideas 2024 Page 30 Page 32